This article builds upon my previous post, plus the existing white papers and blogs listed below, to provide a VCDX-style guide to the design decisions that need to be considered for Enterprise-level Network Attached Storage (NAS) with vSphere 5.1 and the EMC Symmetrix VMAX 20K with VNX VG8 NAS Gateways.

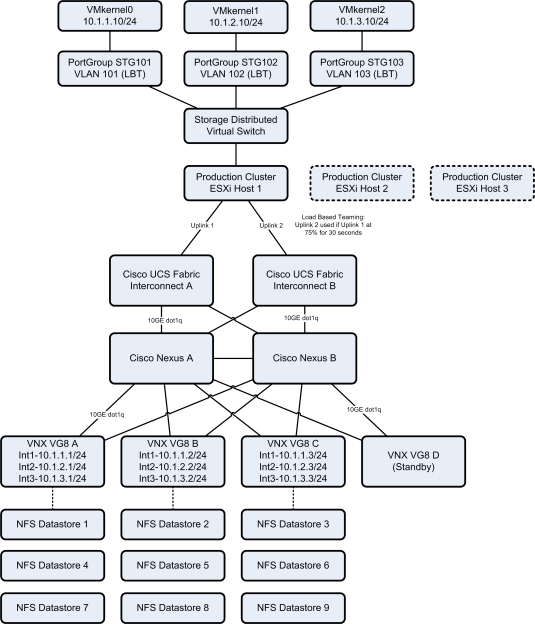

The diagram below provides an overview of the use case.

The use case mechanisms are:

- VDS Load Based Teaming (LBT) – Load balancing of NFSv3 TCP sessions

- NFSv3 volumes – Providing Datastores and vDisks larger than 64TB

- 10GE switching – Lower shared-storage cost and less Fibre Channel in the Data Center

- Non-routed IP Storage Subnets with the minimum number of switch hops – Low-latency access to storage

Conceptual Model

- Requirement 1: VMDK files larger than 64TB

- Requirement 2: Datastores larger than 64TB

- Requirement 3: Converged 10Gb/40Gb Ethernet for Storage and LAN traffic

- Requirement 4: Cost effective N+1 scale-out infrastructure

- Constraint 1: EMC Symmetrix VMAX Storage

- Constraint 2: EMC VNX VG8 NAS Gateways

- Constraint 3: Cisco Unified Computing System

- Constraint 4: Cisco Nexus 5020 Core Switches

- Assumption 1: At least two vNICs per host

- Assumption 2: Correctly sized, redundant, Enterprise LAN switched network

You need to consider:

- NAS Gateways bolted to the front of Monolithic storage or separate NAS boxes with DAS?

- Physically separate switched network for NAS?

- 10Gb or 1Gb networks?

- Jumbo frames?

Scale-Out Enterprise Network Attached Storage (NAS)

Design Decision 1: Size and Number of NFS Volumes to be presented as Datastores?

Design Decision 2: Number of NAS Filers to use?

Design Decision 3: NFS Exports to restrict host access?

Design Decision 4: VAAI?

Depending on the Datastore requirements, you can design Datastores larger than 64TB (VNX supports 255TB, NetApp supports 256TB), which exceeds the 64TB VMFS-5 limit that applies to SAN (block) storage with vSphere. At least two NAS filers should be used, assuming they support the virtual interfaces required for LBT to function. NFS Exports will be used to secure each NFS volume from unrestricted host access (important for regulatory compliance). There is no reason not to use VAAI; however, a VAAI NAS plug-in must be installed on each host.
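The following sketch illustrates why NFS matters for large Datastores. It counts how many Datastores a given capacity needs under the 64TB VMFS-5 limit and under the 255TB VNX NFS limit quoted above. The 200TB requirement is a hypothetical figure, and the sketch ignores free-space headroom, snapshots and Checkpoint reserve.

```python
import math

# Per-Datastore size limits in TB. VMFS-5 caps block (SAN) Datastores at 64TB;
# the VNX figure is the NFS file system limit quoted in the text.
VMFS5_MAX_TB = 64
VNX_NFS_MAX_TB = 255


def datastores_needed(capacity_tb: float, max_datastore_tb: float) -> int:
    """Minimum number of Datastores needed to hold capacity_tb when each
    Datastore is capped at max_datastore_tb. No free-space headroom is reserved."""
    if capacity_tb <= 0:
        return 0
    return math.ceil(capacity_tb / max_datastore_tb)


if __name__ == "__main__":
    requirement_tb = 200  # hypothetical capacity requirement
    print("VMFS-5 Datastores:", datastores_needed(requirement_tb, VMFS5_MAX_TB))     # 4
    print("VNX NFS Datastores:", datastores_needed(requirement_tb, VNX_NFS_MAX_TB))  # 1
```

Fewer, larger Datastores mean fewer mounts per host. The trade-off is a larger failure domain per volume, which feeds back into Design Decisions 1 and 2.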

Storage DRS and SIOC must also be considered as described in this post.

Configuration: Each NFS volume should be performance optimised with the following command:

`server_mount <server name> -option noprefetch,uncached <vol name> <vol path>`
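The same mount options have to be applied to every file system presented as a Datastore, so generating the commands helps avoid typos across many volumes. This minimal sketch only builds command strings from the template above and does not connect to the VNX. The Data Mover name `server_2` and the file system names are hypothetical. Check the output against the VNX command reference for your release before using it.

```python
from typing import List, Tuple

# Mount options from the recommended server_mount command.
MOUNT_OPTIONS = "noprefetch,uncached"


def server_mount_commands(server: str, volumes: List[Tuple[str, str]]) -> List[str]:
    """Build one server_mount command per (vol name, vol path) pair."""
    commands = []
    for vol_name, vol_path in volumes:
        if not vol_path.startswith("/"):
            raise ValueError(f"mount path must be absolute: {vol_path}")
        commands.append(
            f"server_mount {server} -option {MOUNT_OPTIONS} {vol_name} {vol_path}"
        )
    return commands


if __name__ == "__main__":
    # Hypothetical Data Mover and file system names, for illustration only.
    for cmd in server_mount_commands("server_2", [("nfs_ds01", "/nfs_ds01"),
                                                  ("nfs_ds02", "/nfs_ds02")]):
        print(cmd)
```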

Virtual Switches

Design Decision 5: Number of Virtual Distributed Switches?

Design Decision 6: Number of Uplinks per VDS?

Design Decision 7: Number of Portgroups (with VLANs) per VDS?

Design Decision 8: Portgroup Teaming?

There should be a VDS dedicated to IP Storage. VDS Load Based Teaming (LBT) will be used to load balance IP Storage traffic across the vmnics (separate Portgroups). Three or more VMkernel ports are required. Two or more NAS Engines are required, with three virtual filer instances (separate Portgroups) per NAS box. Load balancing occurs across each pair of host vmnics, based upon uplink load (75% utilisation for more than 30 seconds).

If you are using rack-mounted hosts, be very careful about using LACP directly to ESXi: weigh the pros and cons of what you are trying to achieve, and involve your networking team. In this use case, vPC is used to connect the Fabric Interconnects to the Core LAN Switches, and the vNICs presented to the ESXi host are two separate 10GE links with no bonding. To use VDS and LBT you must have vSphere Enterprise Plus licences. End-to-End QoS must also be considered as described in this post.
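The LBT trigger described above moves traffic when an uplink's mean utilisation exceeds 75% over 30 seconds. That rule can be modelled in a few lines to help reason about the design. This is a simplified sketch, not VMware's algorithm. The one-sample-per-second input, the choice of the least-loaded uplink as the target, and the function name are all assumptions.

```python
from statistics import mean
from typing import Dict, List, Optional, Tuple

THRESHOLD = 0.75      # 75% mean utilisation
WINDOW_SECONDS = 30   # evaluation window, one sample per second assumed


def lbt_candidate_move(samples: Dict[str, List[float]]) -> Optional[Tuple[str, str]]:
    """samples maps vmnic name -> per-second utilisation (0.0-1.0), newest last.
    Returns (overloaded_uplink, target_uplink), or None if no move is warranted."""
    window_means = {}
    for nic, series in samples.items():
        if len(series) < WINDOW_SECONDS:
            return None  # not enough data to evaluate a full window
        window_means[nic] = mean(series[-WINDOW_SECONDS:])

    overloaded = [nic for nic, m in window_means.items() if m > THRESHOLD]
    if not overloaded:
        return None

    source = max(overloaded, key=window_means.get)
    target = min(window_means, key=window_means.get)
    if target == source or window_means[target] > THRESHOLD:
        return None  # no uplink with spare capacity to move a port to
    return source, target


if __name__ == "__main__":
    # Hypothetical pair of host vmnics: one saturated, one lightly used.
    print(lbt_candidate_move({"vmnic0": [0.9] * 30, "vmnic1": [0.2] * 30}))
```

With separate VMkernel ports per Portgroup, each NFS mount's TCP session stays on one uplink at a time. LBT can only rebalance by moving whole ports, which is why the design needs several VMkernel ports and filer interfaces rather than one.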

Configuration: ESXi host advanced network settings for NFS and TCP/IP stack will have to be optimised.

For example (confirmed working settings with Version numbers below):

Also consider disabling “TCP Delayed Ack” as described by Long White Virtual Clouds.
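Advanced settings are applied per host, so they are usually scripted. This minimal sketch builds `esxcli system settings advanced set` commands from a mapping of option paths to integer values. It is not the author's verified settings list. Only `/NFS/MaxVolumes` is shown (256 is the ESXi 5.x maximum). TCP/IP heap options such as `/Net/TcpipHeapSize` and `/Net/TcpipHeapMax` should be added with values from VMware or your storage vendor's guidance for your build.

```python
from typing import Dict, List


def esxcli_advanced_set(settings: Dict[str, int]) -> List[str]:
    """Build esxcli commands that set integer ESXi advanced options.
    Option paths use the /Group/Name form expected by esxcli."""
    commands = []
    for option, value in settings.items():
        if not option.startswith("/"):
            raise ValueError(f"option path must start with '/': {option}")
        commands.append(f"esxcli system settings advanced set -o {option} -i {value}")
    return commands


if __name__ == "__main__":
    # Illustrative only: extend with the TCP/IP heap settings your vendor specifies.
    for cmd in esxcli_advanced_set({"/NFS/MaxVolumes": 256}):
        print(cmd)
```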

Physical Switched Network

Design Decision 9: vPC?

Design Decision 10: Jumbo Frames?

Design Decision 11: Non-Routed or Routed IP Storage Networks?

Design Decision 12: Minimum Number of Hops?

Design Decision 13: Dedicated NAS LAN Switch?

Whether “to vPC or not to vPC” was discussed in the previous section. Jumbo frames should be enabled on the storage switched network; however, the MTU must be configured consistently along the entire path (VMkernel ports, VDS, Fabric Interconnects, Core Switches and NAS interfaces) for them to work correctly. 10Gb, non-routed IP networks should be configured with the minimum number of switch hops between the host and the NAS hardware for high-throughput, low-latency communication. If you are not going to implement QoS, or you have a 1Gb LAN, then a separate 10Gb Storage LAN Fabric should be considered.
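Two properties of a storage path can be checked on paper before testing on the wire. First, the NAS interface must sit in the VMkernel port's own subnet (non-routed). Second, no device on the path may have a smaller MTU than the endpoints. The sketch below checks both, and also computes the largest unfragmented ping payload for `vmkping -d -s`: MTU minus 20 bytes of IPv4 header and 8 bytes of ICMP header. All addresses, device names and MTU values are hypothetical.

```python
import ipaddress
from typing import Dict, List

IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def vmkping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet at this MTU."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def check_storage_path(vmk_cidr: str, nas_ip: str, hop_mtus: Dict[str, int]) -> List[str]:
    """Return problems found on one VMkernel-to-NAS path (empty list = OK).

    vmk_cidr: VMkernel address with prefix length, e.g. "192.168.50.11/24"
    nas_ip:   NAS (virtual filer) interface address
    hop_mtus: MTU per device on the path, in order, starting with the VMkernel port
    """
    if not hop_mtus:
        raise ValueError("hop_mtus must list at least the VMkernel port")
    problems = []
    vmk = ipaddress.ip_interface(vmk_cidr)
    if ipaddress.ip_address(nas_ip) not in vmk.network:
        problems.append(f"{nas_ip} is outside {vmk.network}; traffic would be routed")
    endpoint_mtu = next(iter(hop_mtus.values()))  # the VMkernel port's MTU
    for device, mtu in hop_mtus.items():
        if mtu < endpoint_mtu:
            problems.append(f"{device} MTU {mtu} is below endpoint MTU {endpoint_mtu}")
    return problems


if __name__ == "__main__":
    path = {"vmk1": 9000, "VDS": 9000, "Fabric Interconnect": 9216,
            "Nexus 5020": 9216, "VG8 interface": 9000}
    print(check_storage_path("192.168.50.11/24", "192.168.50.100", path))  # []
    print(f"vmkping -d -s {vmkping_payload(9000)} 192.168.50.100")  # -s 8972
```

Switches are often set above 9000 (for example 9216) to leave room for encapsulation. For that reason the sketch flags only hops smaller than the endpoint MTU rather than requiring identical values.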

For Example:

Risks

- If you use the EMC VNX VG8 for Datastore backup/recovery with Checkpoints and/or EMC Replication Manager, make sure you test NFS volume performance, since Checkpoints have a performance tax. The more Checkpoints you have, the greater the tax.

- Enabling De-duplication on each NFS volume needs to be considered carefully, as it also carries a performance tax.

- Enabling Compression on each Meta-Volume (EMC Symmetrix VMAX) that is used by the EMC VNX VG8 should also be considered carefully, as it carries a performance tax too.

- EMC Symmetrix VMAX Storage Groups – make sure your tiers of disks are in separate storage groups with a separate FAST-VP policy

- Do not run NFS on vSphere 5.5 Update 1 until the NFS APD issue described in KB 2076392 is resolved; stick with 5.5 GA until then.

Alternatives

- Two or more NAS boxes (with DAS)

- Core switched network – Clos-type Leaf & Spine

- Hyper-converged solution

- Attach NAS to Cisco UCS as Appliance Ports

Version Matrix

This is a verified configuration that works.

- VMware vSphere 5.1 Update 2 Build 1483097

- EMC Symmetrix VMAX Enginuity Version 5876.268.174

- EMC VNX VG8 Version 7.1.74-5

- Cisco UCS Version 2.1(1a)

- Cisco Nexus OS Version 5.0(3)N2(2)

Additional information

- Cormac Hogan NFS Best Practices series

- Wahl Network NFS on vSphere Deepdive series

- VMware vSphere 5.1 documentation

- VMware NAS best practices whitepaper (ESXi 3.5/4)

- VMware vSphere 5.5 Update 1 NFS APD KB 2076392

- Virtual Geek Multivendor NFS post with vSphere

- Long White Virtual Clouds EtherChannel and IP Hash or Load Based Teaming?

- Long White Virtual Clouds TCP Delayed Ack and vSphere IP Based Storage

- Scott Lowe EMC Celerra Optimisations on vSphere

- EMC Documentation for VNX with vSphere

- vcdx133.com Tech 101 – EMC Symmetrix VMAX & PowerPath/VE

- vcdx133.com Evolution of Storage – What is your strategy?

- vcdx133.com EMC FAST-VP & PowerPath/VE with SDRS & SIOC

- vcdx133.com End-to-End Network QoS for vSphere 5.1 with Cisco UCS & Nexus

Great post Rene!

With this volume of data, how are backups & restores catered for against SLAs?

Slightly off topic, but any considerations for data integrity?

Hello Craig,

Thanks for the feedback.

In answer to your question: It depends.

This question needs to be answered: How does the Availability of the services (SLAs) that run on this storage translate to RPO, RTO and MTD (i.e. Business Impact Analysis)?

These answers must then be translated to the following design considerations for Recovery (local site – HA and remote site – DR):

* Corruption/Destruction of the physical array (Datastores unavailable) – Switch to other Highly Available system (Anti-Affinity SDRS, separate Datastores & array) of local site and continue running or initiate DR Failover to remote site and continue running

* Corruption/Deletion of Data of the VM (not replicated to HA VM/remote site) – same as above

* Accidental or Malicious Deletion of Data from the VM (replicated to HA VM/remote site) – Toughest to protect against and recover from: you have to restore from the daily image backup, then restore hourly/quarter-hour Application- or Database-consistent incrementals to within the RPO, and be running again within the RTO.

If your RPO/RTO is minutes, then you will have to use a VADP-based product (e.g. EMC Avamar, Commvault Simpana, Veeam) and test, test & test again to ensure you are meeting your RPO/RTO. If you are using legacy Tape for this amount of data with an RPO/RTO of minutes, then you will break your SLA.
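The tape point can be made concrete with simple arithmetic. A full restore takes roughly capacity divided by sustained throughput, and that has to fit inside the RTO. Worst-case data loss equals the gap between consistent recovery points, and that has to fit inside the RPO. The data-set size, throughput and targets below are hypothetical. Real restores also pay for catalogue lookups, media mounts and application recovery, so treat the result as a lower bound.

```python
SECONDS_PER_HOUR = 3600
MB_PER_TB = 1_000_000  # decimal units


def restore_hours(data_tb: float, throughput_mb_s: float) -> float:
    """Hours needed to stream data_tb back at a sustained throughput_mb_s."""
    return data_tb * MB_PER_TB / throughput_mb_s / SECONDS_PER_HOUR


def meets_rto(data_tb: float, throughput_mb_s: float, rto_hours: float) -> bool:
    """True if a full restore at this throughput fits inside the RTO."""
    return restore_hours(data_tb, throughput_mb_s) <= rto_hours


def meets_rpo(recovery_point_interval_min: float, rpo_min: float) -> bool:
    """Worst-case data loss equals the gap between consistent recovery points."""
    return recovery_point_interval_min <= rpo_min


if __name__ == "__main__":
    # Hypothetical figures: 100TB data set, 300MB/s sustained restore, 30-minute RTO.
    print(f"Full restore: {restore_hours(100, 300):.1f} hours")       # 92.6
    print("Meets 30-minute RTO:", meets_rto(100, 300, 0.5))            # False
    print("Hourly incrementals meet 15-minute RPO:", meets_rpo(60, 15))  # False
```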